There is a widely held belief that because math is involved, algorithms are automatically neutral.

This widespread misconception allows bias to go unchecked, and allows companies and organizations to avoid responsibility by hiding behind algorithms.

This is called Mathwashing: when power and bias hide behind the facade of 'neutral' math.

This is how it works:

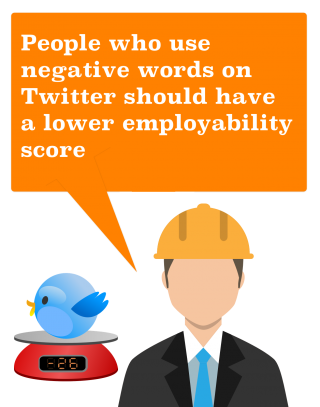

ACCIDENTAL

When good intentions are combined with a lack of knowledge and naive expectations.

See also: Filterbubbles.

There are two types:

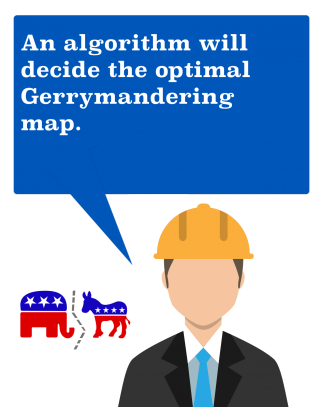

ON PURPOSE

Because people don't question decisions from a "neutral" algorithm, this faith can be abused.

Facebook: "We are just a platform".

There are 2 things you should realize:

1. PEOPLE DESIGN ALGORITHMS

They make important choices like:

Which data to use...

How to weigh it...
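
To make these choices concrete, here is a minimal sketch in Python. The applicants, feature names, and weights are all hypothetical, invented purely for illustration. It shows that the same data can produce opposite rankings depending on the weights a designer picks.

```python
# Hypothetical applicants and features, invented for illustration only.
applicants = {
    "A": {"test_score": 0.9, "years_experience": 0.2},
    "B": {"test_score": 0.5, "years_experience": 0.9},
}


def rank(applicants, weights):
    """Return applicant names ordered from highest to lowest weighted score.

    Features that have no entry in `weights` are ignored, which is the
    "which data to use" choice. The weight values are the "how to weigh it"
    choice.
    """
    def score(features):
        return sum(
            weights[name] * value
            for name, value in features.items()
            if name in weights
        )

    return sorted(applicants, key=lambda name: score(applicants[name]), reverse=True)


# Two designers, two sets of priorities (both hypothetical).
favor_tests = {"test_score": 0.8, "years_experience": 0.2}
favor_experience = {"test_score": 0.2, "years_experience": 0.8}

print(rank(applicants, favor_tests))       # A is ranked first
print(rank(applicants, favor_experience))  # B is ranked first
```

Neither ranking is more "mathematical" than the other. The difference comes entirely from a human decision about what matters.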

2. Data is not automatically objective either.

Algorithms work on the data we provide. Anyone who has worked with data knows that data is political, messy, often incomplete, sometimes fake, and full of complex human meanings.

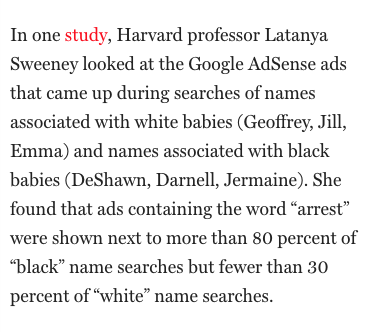

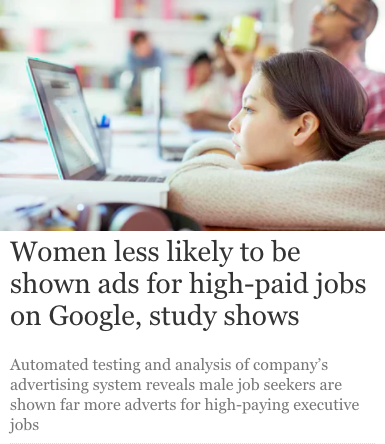

Even if you have 'good' and 'clean' data, it will still reflect societal biases.

NEW TECHNIQUES LIKE "MACHINE LEARNING" ARE MAKING MATHWASHING A BIGGER PROBLEM:

ACCIDENTAL

These learning algorithms teach themselves to classify things by looking at huge amounts of example data.

This data will reflect current societal inequalities, like women getting paid less than men, which the algorithms will consider the norm.
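
A deliberately simplified sketch of this effect, in Python. The salary figures are made up, and the "model" is just a per-group average rather than a real machine learning method. The point is only that a system trained on unequal history hands the inequality back as its recommendation.

```python
from statistics import mean

# Hypothetical historical salaries, invented for illustration only.
history = [
    ("man", 52000), ("man", 50000), ("man", 54000),
    ("woman", 45000), ("woman", 47000), ("woman", 46000),
]


def train(records):
    """'Learn' the average salary per group from past records."""
    by_group = {}
    for group, salary in records:
        by_group.setdefault(group, []).append(salary)
    return {group: mean(salaries) for group, salaries in by_group.items()}


model = train(history)


def suggest_salary(group):
    """Suggest a salary for a new hire based purely on what was learned."""
    return model[group]


# The historical pay gap is now the model's idea of "normal".
gap = suggest_salary("man") - suggest_salary("woman")
print(gap)
```

Nothing in the code mentions discrimination. The gap comes entirely from the data the model was given.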

Algorithms pick up on current inequalities, and amplify them.
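
One way to picture the amplification is a toy simulation, sketched below in Python. Everything in it is an assumption made for illustration: the groups "A" and "B", the starting numbers, and the rule that candidates are scored by how common their group is among past hires. Real systems are far more complex, but the loop has the same shape.

```python
def simulate(past_a, past_b, rounds, hires_per_round=10):
    """Track group A's share of all hires when each round copies the past."""
    shares = [past_a / (past_a + past_b)]
    for _ in range(rounds):
        # An equally qualified pool: half group A, half group B.
        candidates = ["A"] * 50 + ["B"] * 50
        share_a = past_a / (past_a + past_b)
        # The "learned" score is simply how common each group is among past hires.
        score = {"A": share_a, "B": 1 - share_a}
        ranked = sorted(candidates, key=lambda group: score[group], reverse=True)
        hired = ranked[:hires_per_round]
        # This round's decisions become next round's training data.
        past_a += hired.count("A")
        past_b += hired.count("B")
        shares.append(past_a / (past_a + past_b))
    return shares


shares = simulate(past_a=60, past_b=40, rounds=5)
print([round(share, 2) for share in shares])
```

Starting from a 60/40 split, group A's share of all hires grows every round, even though the candidate pool is evenly split. Each decision feeds the next one.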

"Old-boys Algorithms" create feedback loops that perpetuate current inequalities.

ON PURPOSE

Even the designers of self-learning algorithms cannot explain exactly how those algorithms reach their final decisions. This offers a clever path to avoiding responsibility.

Algorithms can evaporate accountability.

"The algorithm did it"